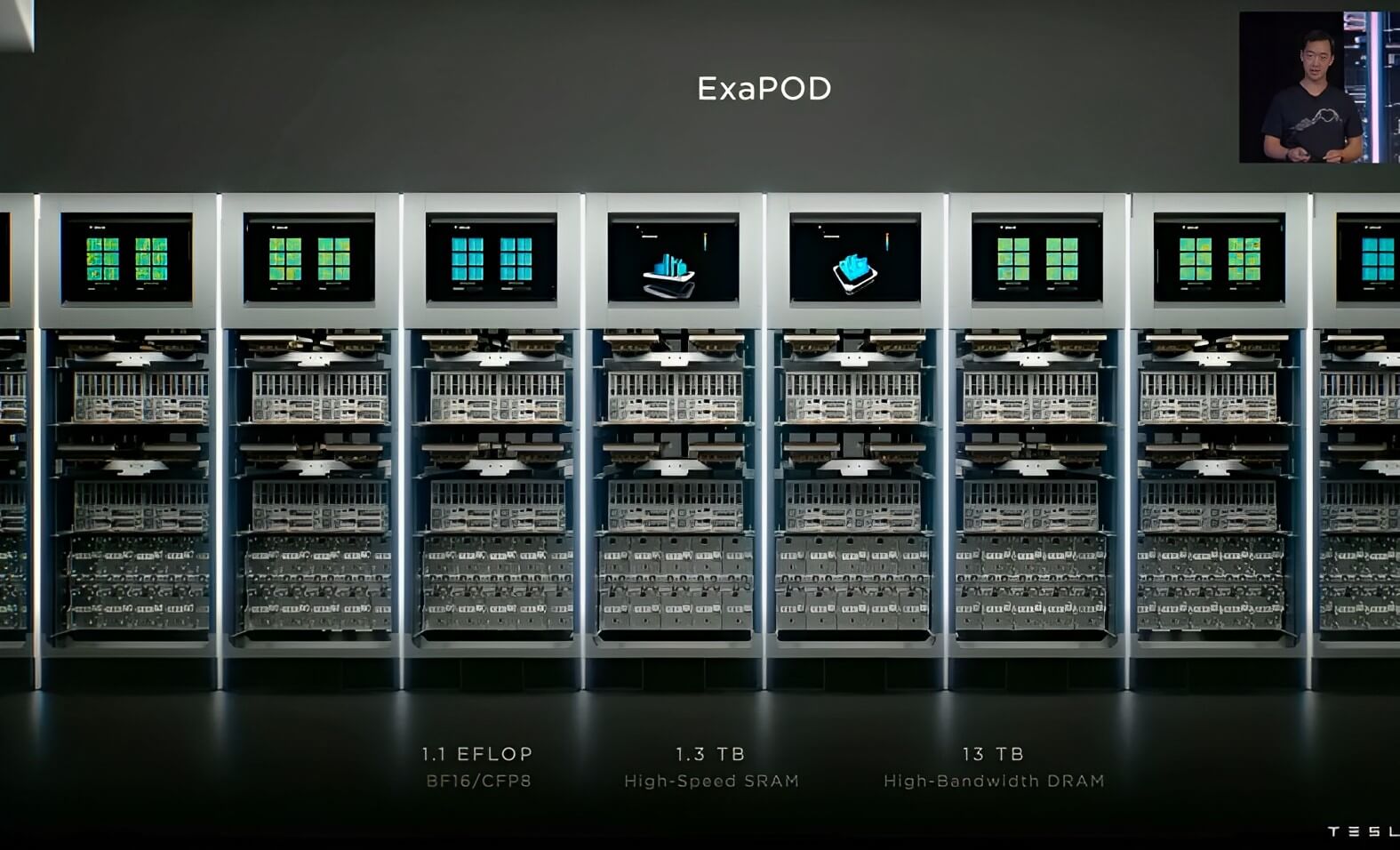

Tesla has activated a massive 10,000-unit NVIDIA H100 GPU cluster to accelerate the training of its Full Self-Driving (FSD) technology. This comes on the heels of the company bringing its in-house supercomputer, Dojo, into production last month. Tesla is counting on both systems to speed up its work on FSD and other AI-driven functionality.

However, there seems to be some confusion regarding these two systems. They are separate, but they serve complementary roles in Tesla’s plans. Think of the NVIDIA H100 GPU cluster as akin to Apple using Intel chips: powerful, but not fully optimized for Tesla’s specific needs. Dojo, on the other hand, is like Apple silicon, the chips Apple designs in-house: custom-built and tuned for the company’s own requirements.

Together, they form a dual computational strategy that positions Tesla at the forefront of the automotive and tech industries.

Tesla NVIDIA H100 GPU Cluster

By leveraging the NVIDIA H100 GPU cluster, Tesla aims to overcome the computational bottlenecks that have hindered the progress of FSD technology.

Activation and Significance

Tesla’s activation of the NVIDIA H100 GPU cluster is a monumental step in the company’s journey toward achieving full autonomy in its vehicles. This cluster, comprising a staggering 10,000 units, is not just a technological marvel but also a strategic move. It serves as an external powerhouse that significantly boosts Tesla’s computational capabilities, especially in the realm of Full Self-Driving (FSD) technology.

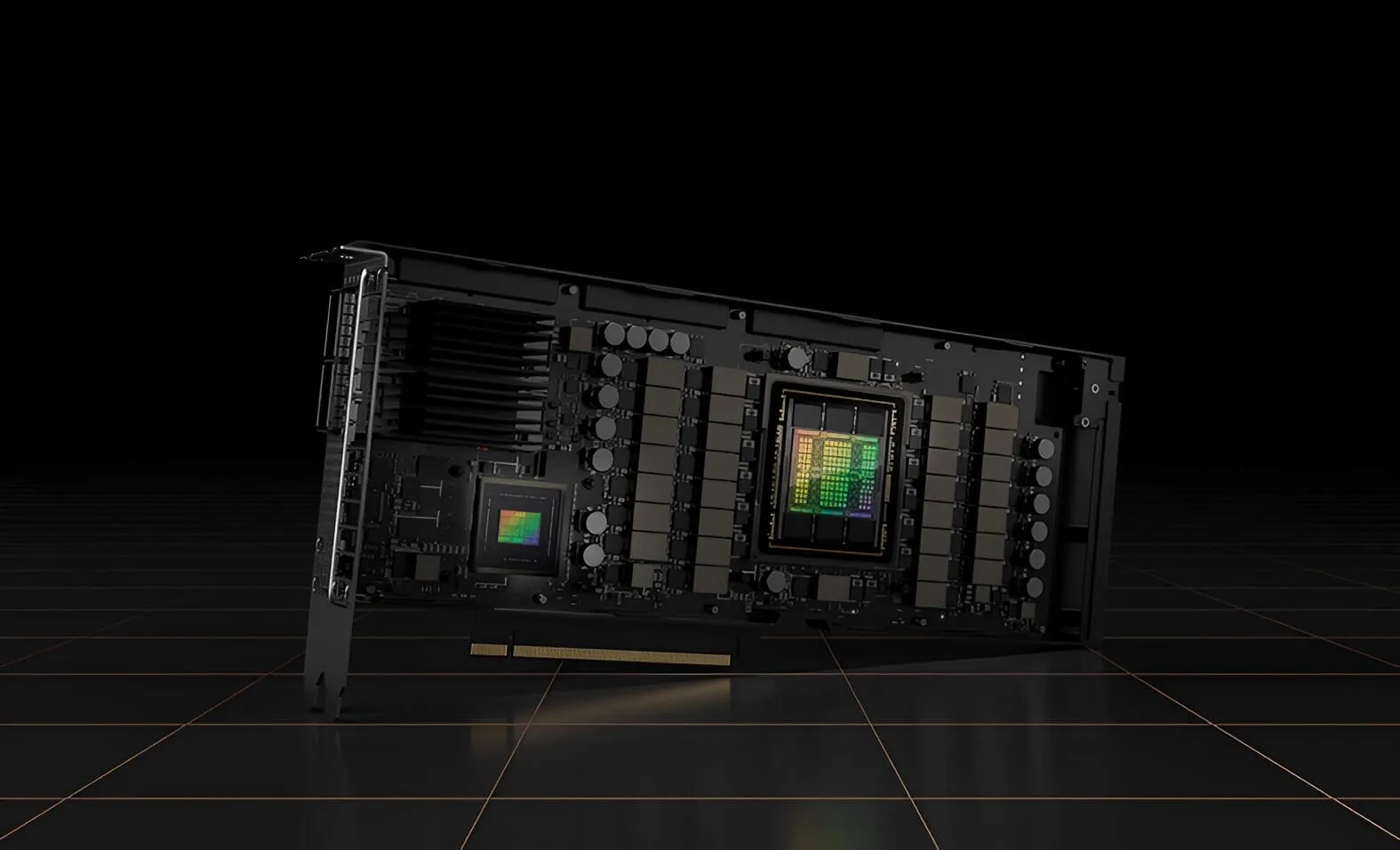

NVIDIA H100 Performance

The NVIDIA H100 GPUs are a force to be reckoned with. Launched in late 2022, they deliver, by NVIDIA's figures, up to 30 times faster inference on large language models and up to 9 times faster AI training than their predecessor, the A100. The full GH100 chip has 18,432 CUDA cores, 576 tensor cores, and 144 streaming multiprocessors (the shipping H100 SXM5 enables 16,896, 528, and 132 of these, respectively), paired with 80 GB of high-bandwidth memory.

Design Focus on Compute-Intensive AI Workloads

What sets the H100 apart is its design focus on data-center AI and high-performance computing rather than graphics rendering, which makes it a good fit for training on large volumes of video, a crucial aspect of FSD technology. The H100’s architecture is built to scale across many GPUs, enabling Tesla to train complex neural networks more efficiently. However, it’s worth noting that the H100 draws more power than the A100 (up to 700 W for the SXM version, versus 400 W), a trade-off for its superior performance.

NVIDIA H100 vs. A100

While the A100 GPUs served Tesla well for a period, the new H100 GPUs offer a level of performance and specialization that is more aligned with Tesla’s current and future computational needs. The transition to the H100 is not just an upgrade; it’s a strategic move that will significantly accelerate the development and deployment of Tesla’s Full Self-Driving technology.

Historical Context and Launch Date

The NVIDIA A100 GPU was a significant milestone when it was launched in May 2020. It was designed for high-performance computing and artificial intelligence workloads, and it became the backbone of many computational tasks across various industries, including automotive AI. Tesla has been using A100 GPUs for the last couple of years to train its FSD algorithms, among other tasks.

NVIDIA A100 Technical Specifications

The A100 came with 6,912 CUDA cores, 432 tensor cores, and either 40 GB of HBM2 or 80 GB of HBM2e high-bandwidth memory. These specifications made it a formidable choice for high-performance computing tasks at the time of its launch, but they have since been significantly outpaced by the new H100.

Performance Differences

The H100 GPUs offer a large jump in performance over the A100. NVIDIA's figures put them at up to 30 times faster for inference on large language models and up to 9 times faster for AI training, and high-performance computing workloads are reported to run over 5 times faster. Part of this comes from higher core counts (18,432 CUDA cores and 576 tensor cores on the full chip, versus 6,912 and 432 on the A100), and the rest from architectural improvements.

Additionally, the H100 is designed for compute-intensive AI workloads, making it well-suited to training on video, a critical component of FSD technology.
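As a rough sanity check on these numbers, the sketch below compares the CUDA core counts cited in this article with the quoted "up to 9 times" training speedup. It is only back-of-the-envelope arithmetic: the variable and function names are illustrative, and the speedup is a vendor "up to" figure, not a measurement.

```python
# CUDA core counts as cited in this article.
A100_CUDA_CORES = 6_912
H100_CUDA_CORES = 18_432

# "Up to" AI-training speedup quoted in this article (a vendor figure).
CLAIMED_TRAINING_SPEEDUP = 9.0


def core_ratio(new_cores: int, old_cores: int) -> float:
    """Return how many times more cores the newer chip has."""
    return new_cores / old_cores


cuda_ratio = core_ratio(H100_CUDA_CORES, A100_CUDA_CORES)

# Portion of the claimed speedup that core count alone does not account for.
residual_factor = CLAIMED_TRAINING_SPEEDUP / cuda_ratio

print(f"CUDA core ratio: {cuda_ratio:.2f}x")
print(f"Speedup not explained by core count: {residual_factor:.2f}x")
```

The core count grows by roughly 2.7 times, so a 9 times training speedup would need a further factor of about 3.4 from elsewhere, such as newer tensor cores, lower-precision number formats, and faster memory and interconnect.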

Tomorrow, @Tesla will turn on a massive and very expensive 10,000 unit NVIDIA H100 GPU cluster to help it train FSD. But that got me wondering, what is the difference between these new H100 GPUs and the older A100 graphics processing units (GPUs) Tesla has been using for the last… pic.twitter.com/ZoCjR1SdjI

— Sawyer Merritt (@SawyerMerritt) August 28, 2023

The Apple-Intel Analogy

To fully grasp the relationship between Tesla’s use of NVIDIA’s H100 GPUs and its own Dojo supercomputer, it’s helpful to draw an analogy with Apple’s transition from Intel chips to Apple silicon, the processors it designs in-house. For years, Apple relied on Intel’s processors for its range of Mac computers. These chips were powerful and got the job done, but they were not fully optimized for Apple’s specific needs and vision.

Similarly, Tesla’s use of NVIDIA’s H100 GPUs can be likened to Apple’s use of Intel chips. The H100 GPUs are incredibly powerful and offer a significant boost in performance, especially in the realm of Full Self-Driving technology. However, they are not custom-designed to Tesla’s exact specifications and requirements.

While they provide a robust solution for high-performance computing tasks, they may not be fully optimized for the unique challenges and opportunities that Tesla’s FSD technology presents.

How Tesla Plans to Use the NVIDIA H100 GPU Cluster for FSD

The NVIDIA H100 GPU cluster is built for compute-intensive AI workloads, making it a strong choice for training on video, a crucial component of FSD technology. With its high-performance computing capabilities, the H100 allows Tesla to train complex neural networks more efficiently.

This is particularly important for FSD, which requires vast amounts of data to be processed and learned from. By leveraging the H100’s superior performance metrics, Tesla aims to overcome computational bottlenecks and speed up the iterative process of machine learning, thereby bringing FSD technology closer to full maturity.
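To make the idea of a computational bottleneck concrete, here is a minimal sketch of how wall-clock training time shrinks as GPUs are added. Every number in it is hypothetical (dataset size, per-GPU throughput, and scaling efficiency are not Tesla figures), and the `training_hours` helper is an illustrative name, not part of any real tool.

```python
def training_hours(total_clips: int,
                   clips_per_gpu_per_hour: float,
                   num_gpus: int,
                   scaling_efficiency: float = 1.0) -> float:
    """Estimate wall-clock hours for one pass over a dataset.

    All inputs are hypothetical. scaling_efficiency, in (0, 1], models the
    communication overhead that keeps real clusters below linear scaling.
    """
    if num_gpus <= 0 or clips_per_gpu_per_hour <= 0:
        raise ValueError("num_gpus and clips_per_gpu_per_hour must be positive")
    if not 0 < scaling_efficiency <= 1:
        raise ValueError("scaling_efficiency must be in (0, 1]")
    cluster_rate = clips_per_gpu_per_hour * num_gpus * scaling_efficiency
    return total_clips / cluster_rate


# Hypothetical workload: 10 million video clips at 100 clips per GPU-hour.
one_gpu = training_hours(10_000_000, 100, num_gpus=1)
cluster = training_hours(10_000_000, 100, num_gpus=10_000,
                         scaling_efficiency=0.8)

print(f"1 GPU: {one_gpu:,.0f} hours")
print(f"10,000 GPUs at 80% efficiency: {cluster:.1f} hours")
```

Under these made-up inputs, a pass that would take years on a single GPU drops to well under a day on the cluster, which is why each training iteration, and therefore each round of FSD improvement, can turn around faster.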

Benefits and Efficiencies of This Approach

Utilizing the NVIDIA H100 GPU cluster as part of a broader computational strategy offers several advantages:

- Scalability: The H100’s architecture is designed for easy scalability, allowing Tesla to expand its computational resources as needed.

- Speed: By NVIDIA's figures, the H100 is up to 30 times faster than its predecessor, the A100, for large language model inference and up to 9 times faster for AI training. This speed is crucial for processing the enormous datasets required for FSD.

- Specialization: The H100 is optimized for compute-intensive AI tasks, making it particularly effective for the video training aspects of FSD.

- Resource Allocation: By offloading certain tasks to the H100, Tesla can more efficiently allocate its other computational resources, creating a more streamlined and effective development pipeline for FSD.

Financial Commitment and Future Plans

Tesla’s activation of the 10,000-unit NVIDIA H100 GPU cluster is not an isolated investment but part of a larger financial strategy aimed at advancing its FSD technology. The company’s commitment to this endeavor is evident in its planned spending for the coming years.

Planned Spending on AI and FSD Training

According to Elon Musk, Tesla is set to invest over $2 billion in 2023 alone on training compute for AI and FSD technologies. This financial commitment is expected to be repeated in 2024, underscoring the company’s long-term vision for autonomous driving. The investment is aimed at overcoming computational bottlenecks and accelerating the development of FSD, among other AI-driven functionalities.

Significance of Financial Commitment

This level of spending on training compute is unusually large for an automaker and highlights Tesla’s aggressive approach to achieving full autonomy. By allocating substantial resources to AI and FSD training, Tesla is not only looking to improve the performance and safety of its autonomous systems but also to secure a competitive edge in the rapidly evolving automotive landscape.

Future Plans

While the immediate focus is on leveraging the NVIDIA H100 GPU cluster for FSD, these financial commitments indicate that Tesla has a broader roadmap. The company is likely to continue investing in state-of-the-art computational resources and technologies to stay ahead of the curve. Although specifics are yet to be revealed, the substantial financial outlay for the next two years suggests that Tesla has a series of innovations and advancements in the pipeline.

Conclusion

The activation of Tesla’s 10,000-unit NVIDIA H100 GPU cluster is a major step in the company’s ongoing efforts to advance Full Self-Driving (FSD) technology. This powerful external resource is meant to relieve the computational bottlenecks that have slowed the development of FSD, offering a significant boost in performance and capabilities.

By making this strategic move, along with substantial financial commitments for the coming years, Tesla is not only accelerating its own development timeline but also setting a new standard for the automotive industry. The company’s investment in cutting-edge computational resources like the H100 GPU cluster signals a future where autonomous driving technology could become a mainstream reality, reshaping our understanding of transportation and mobility.