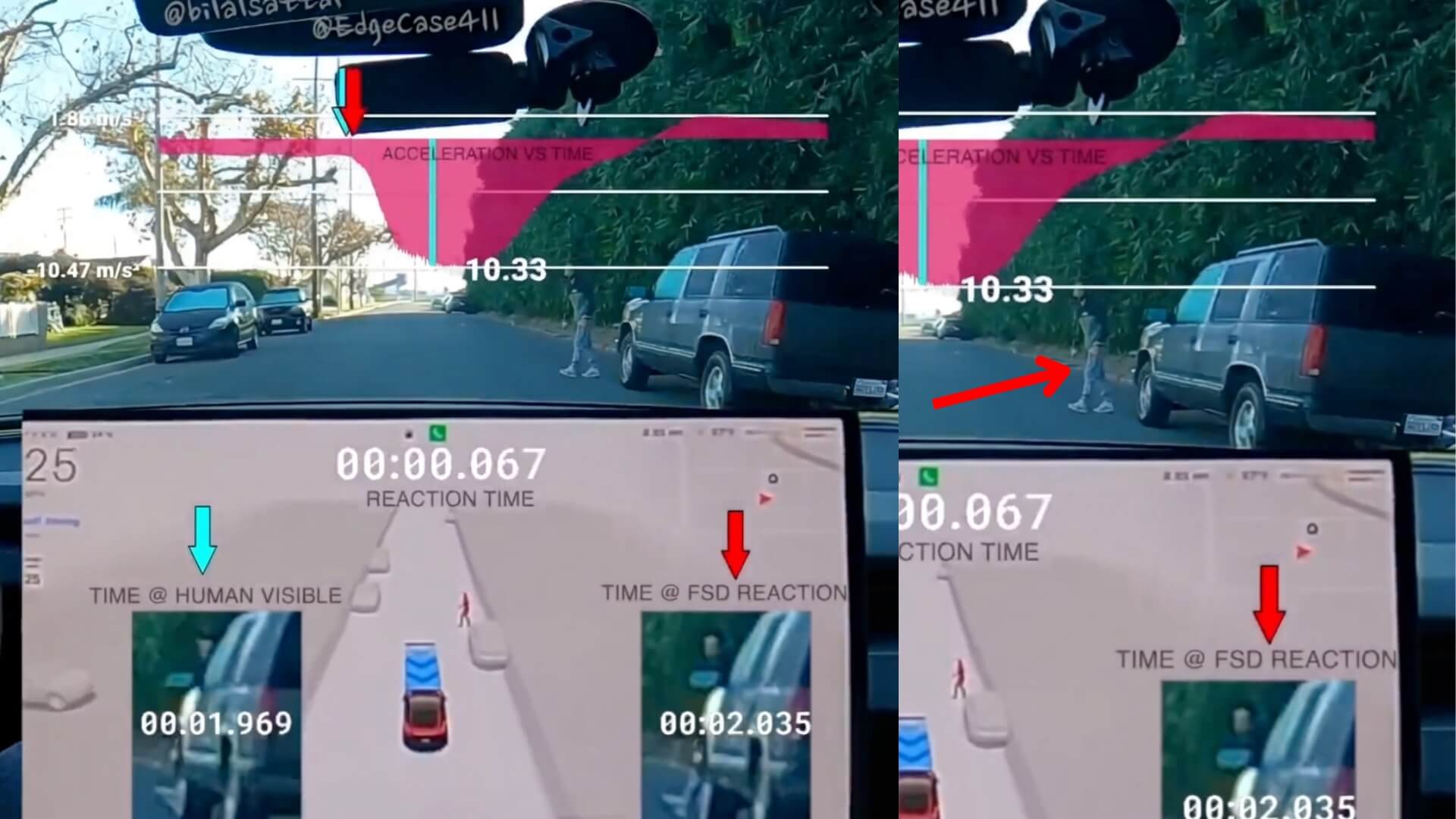

An X user posted a short video that appears to demonstrate Tesla’s Full Self-Driving (Supervised) v14.2.1. In the video, a man steps unexpectedly in front of a moving Tesla during a controlled test scenario, and, according to the poster, the car responds in just 67 milliseconds, far faster than the human brain can process an unexpected event. The car comes to a safe, controlled stop, and the pedestrian is unharmed.

This single instance, caught on camera, illustrates a significant advance in autonomous driving technology. Many onlookers found it both impressive and paradigm-shifting. We have seen before just how capable Tesla FSD (Supervised) can be, from saving lives to delivering remarkably human-like driving.

Seeing vs. Processing: The Speed Gap

The video shows the car’s neural network processing and responding to the danger almost immediately, while viewers needed about eight seconds to see and comprehend what had happened. That contrast, eight seconds to understand versus 67 milliseconds to react, highlights a key advantage of machine perception. Even at their most alert, humans typically take 200–250 milliseconds to respond to a visual stimulus.

That figure can rise dramatically in real-world conditions because of fatigue, distraction, glare, and bad weather. Using sophisticated neural networks and end-to-end training, Tesla’s FSD (Supervised) system is demonstrating the ability to execute braking decisions in a fraction of that time. A 67-millisecond response is not merely quick; it is faster than human biology allows.
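To put the reaction-time gap in physical terms, the short sketch below computes how far a car travels during the reaction delay alone, before any braking starts. It assumes a constant speed of 50 km/h, an illustrative urban speed chosen here rather than a figure from the video, and uses the 67 ms reported for FSD alongside the 200–250 ms typical human range cited above. The helper name `reaction_distance_m` is hypothetical.

```python
# Distance covered during the reaction delay alone, before braking begins.
# Assumptions (not from the video): constant speed throughout the delay,
# and an illustrative urban speed of 50 km/h.

def reaction_distance_m(speed_kmh: float, reaction_ms: float) -> float:
    """Return metres travelled at constant speed during a reaction delay."""
    speed_m_per_s = speed_kmh / 3.6          # convert km/h to m/s
    return speed_m_per_s * (reaction_ms / 1000.0)

SPEED_KMH = 50.0
CASES = [
    ("FSD (reported)", 67),
    ("alert human, fast", 200),
    ("alert human, slow", 250),
]

for label, ms in CASES:
    dist = reaction_distance_m(SPEED_KMH, ms)
    print(f"{label:>18}: {ms:>3} ms -> {dist:.2f} m")
```

Under these assumptions the car covers about 0.93 m in 67 ms, versus roughly 2.78 m at 200 ms and 3.47 m at 250 ms, so a typical human reaction adds about 1.9 to 2.5 metres of travel before the brakes even engage.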

It takes 8 seconds to see it.

It takes 67ms to believe it.

FSD 14.2.1 reacts faster than you can blink.

Everyone is going to want this in their car. @Tesla_AI @elonmusk @bilalsattar pic.twitter.com/O7l8ckCPDX

Edge Case (@edgecase411), December 8, 2025

How Tesla Achieved a Near-Instant Reaction

The v14 series is Tesla’s latest step toward an end-to-end AI architecture, which combines perception, planning, and control in a single neural network system. Rather than relying on conventional hand-written programming logic, the system learns from billions of miles of real-world driving.

1. Vision-Based Neural Processing: Input from Tesla’s eight-camera setup feeds a neural network that continuously predicts the state of the surrounding environment.

2. High-Frequency Decision Loop: FSD v14.2.1 evaluates driving actions at a very high frequency, which lets it make split-second braking decisions.

3. End-to-End Training Enhancements: By unifying perception and control, Tesla reduces the delay caused by passing information between separate systems, enabling much faster reactions.

4. Constant Real-World Learning: Every mile driven with FSD (Supervised) contributes to improving the network for all drivers, accelerating safety improvements.

Together, these technologies produced the striking 67-millisecond reaction, showing how software improvements alone can dramatically enhance vehicle safety.

What’s New in FSD v14.2?

Tesla and its users have described the “v14” FSD releases as some of the largest neural-network and perception overhauls to date. Although Tesla does not publish granular release notes describing every technical change, drivers who have tried the system report several apparent improvements.

Steering is reportedly more humanlike, with turns, merges, and lane changes described as smoother and more confident. FSD v14.2 also seems to choose the right lane earlier, particularly at complex interchanges.

Owners have also reported easier maneuvering around other vehicles, construction zones, and unusual traffic patterns. The overall rhythm of driving feels less artificial: acceleration and deceleration have been described as less robotic and more consistent with normal human driving behavior.