A recent incident has reignited debate over the reliability of Tesla’s Full Self-Driving (FSD) system: California tech founder Jesse Lyu watched his Tesla inadvertently steer onto an active train track in Santa Monica. The incident, captured in a video that went viral on social media, shows that autonomous driving systems still face unresolved challenges and hazards even after their release to the public.

Self-driving Tesla Mistakes Train Tracks for a Road and Steers Onto Them

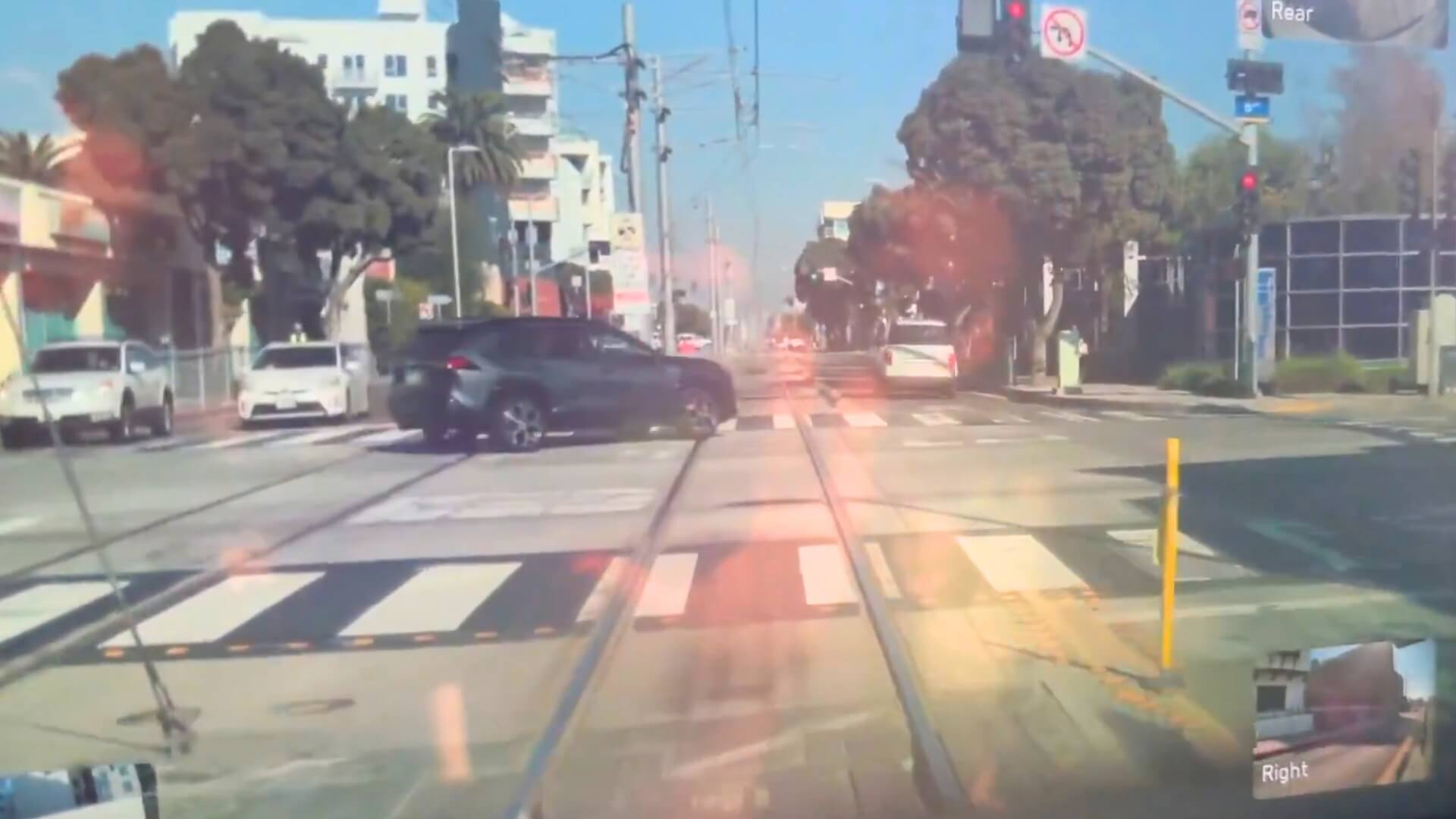

As Lyu explained, he was driving his Tesla on January 2, 2025, and decided to engage the FSD feature in traffic. Moments after making a left turn on Colorado Avenue, the car misjudged the turn and drove onto the tracks of the Los Angeles Metro E Line, which are built for light rail trains.

The tracks are slightly offset from the road and, although they resemble regular lanes, they are marked for train use. Lyu’s only way forward lay between a concrete barrier on one side and a fence on the other, with a train approaching his car from behind.

hi @elonmusk – FSD v13.2.2 just drove me to a train line here in Santa Monica. sending this video clips and hopefully you guys can fix it fast. (i was shaking and i had to ran a red light to save my life). pic.twitter.com/GOERJSEcTq

— Jesse Lyu (@jessechenglyu) January 2, 2025

In a video posted to X (formerly Twitter), Lyu expressed his alarm: “I’ve got nowhere to go… I could get killed because of this.” He explained that he had to run a red light and dash across the road to escape the oncoming train. The recording spread quickly, and millions of people watched the video and reacted to the harrowing close call.

Is Self-Driving Safe?

This is not the first report of a Tesla running into such trouble while in FSD or Autopilot mode. In June 2024, another Tesla driver, in a Model S, recounted that their vehicle treated train tracks as a road and nearly rammed a freight train. Such events have prompted police departments, in California for instance, to issue warnings urging people to stay alert while using these driver-assistance features. Officials stress that FSD and Autopilot still do not turn cars into self-driving vehicles and that drivers should always be prepared to take control.

Lyu’s story raises serious doubts about whether using Tesla cars in FSD or Autopilot mode is safe and effective. Although he once boasted of using the FSD feature for extended periods without incident, this event has shaken him and led him to demand improvements to the system. He then tweeted at Elon Musk, urging Tesla to fix the issue, and described it as a ‘serious problem’ with the software.

Several automotive technology experts weighed in on the event, saying it shows that drivers should always be prepared to take control of the vehicle when using semi-autonomous features. Ross Merva, a Tesla owner and repair specialist, added that the systems can support driving but cannot think on their own and will always need an alert human behind the wheel.

Conclusion

As drivers hand more control of the car over to technology, events like the one that befell Lyu are a reminder, for Tesla and for drivers, of the dangers of relying on self-driving cars. Weighing risk against reward remains a central task for manufacturers and consumers alike.

Recent investigations into self-driving cars have shown that both the technology and the drivers using it must remain vigilant to keep California’s roads and rail tracks safe.