Tesla recently expanded its Full Self Driving [FSD] Beta program to include more cars and drivers. The system still cannot drive a Tesla entirely on its own, so the company requires the driver to remain attentive at all times. Tesla has strict rules about driver conduct while FSD is engaged, and it removes owners who do not follow them from the program. Despite these precautions, a Tesla running FSD Beta can still crash.

Tesla Crash Report Claims Car Fought For Control

Tesla’s decision to test its “Full Self Driving” driver-assistance software with untrained vehicle owners on public roads has attracted a massive amount of scrutiny and criticism. Along the way, the company has rolled out and retracted several software updates meant to upgrade the system and fix bugs.

Tesla FSD Beta

Many video clips uploaded online show Tesla owners using FSD Beta, with varying degrees of success. Some show the driver-assist system confidently handling complex driving scenarios, while others show the car drifting into the wrong lane or making other serious mistakes.

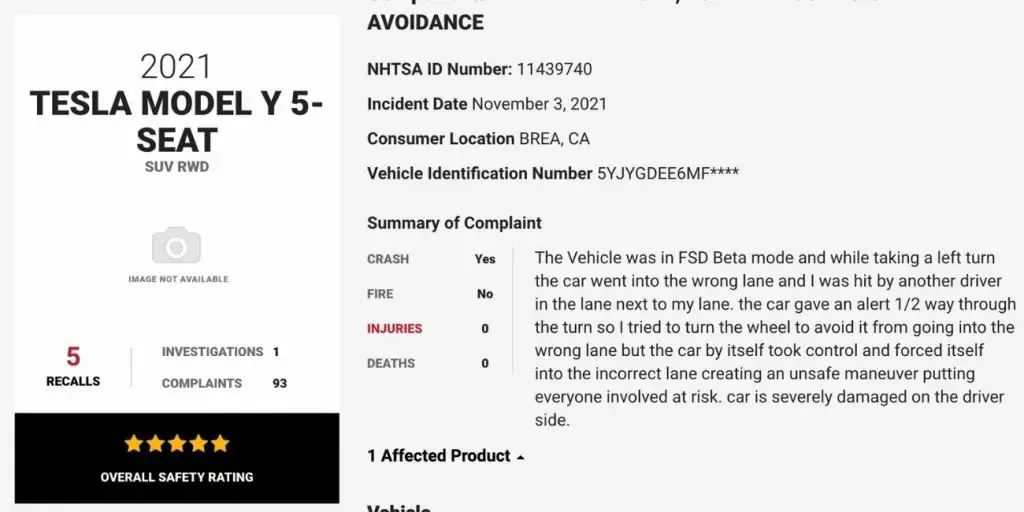

The National Highway Traffic Safety Administration [NHTSA] recently posted the first reported FSD Beta crash on its website. The incident occurred on November 3, 2021, and involved a Model Y. Thankfully, no one was injured and no fire broke out. The incident isn’t as straightforward as it looks, however. Strangely, the complaint claims that the driver attempted to take control of the vehicle while it was in FSD Beta, but the Model Y fought for control and forced itself into the incorrect lane.

The complaint states that the vehicle was in FSD Beta mode and, while taking a left turn, went into the wrong lane, where it was hit by another car in the adjacent lane. The Model Y gave an alert halfway through the turn, so the driver tried to turn the wheel to keep it out of the wrong lane, but the car took control by itself and forced itself into the incorrect lane, creating an unsafe maneuver that put everyone involved at risk. The car was severely damaged on the driver’s side.

FSD Beta mode disengages when the driver intervenes by moving the steering wheel, which is why many FSD Beta testers and other Tesla owners have disputed the claims made in the report. Details of the crash are scarce, and the report doesn’t even say where the incident happened. No one has ever proven that a Tesla would, or even could, fight the driver for control of the vehicle.

NHTSA has yet to verify the claims made in the report. It has stated that it will remove any complaint it can’t verify. The complaint is still online, so further details about the crash may yet emerge.

Tesla has introduced an exhaustive procedure for enrolling in the FSD program, and it selects only the users who excel in its tests. Even after Tesla has selected a driver for the program, it can remove them from the Beta if the software has flagged them more than once for driving that isn’t up to Tesla’s standards. This demanding enrollment procedure eliminates most of the drivers whom Tesla deems unfit for FSD Beta.

Both Tesla and NHTSA will be scrutinizing the claims made in the report. Tesla is already under immense scrutiny from the Department of Transportation [DOT] and can’t afford many setbacks. NHTSA is seeking information from Tesla about the Full Self Driving mode in its vehicles. If the claims made in this complaint prove to be true, the DOT might force Tesla to roll back the Full Self Driving feature. That would be a huge setback for autonomous mobility.

Our Thoughts

The FSD feature doesn’t mean the car is fully autonomous. The driver still needs to be attentive. The growing number of self-driving cars on the roads means more chances for software errors or glitches that may lead to accidents. Authorities must scrutinize all these incidents closely and find their root causes. Doing so will help solve the underlying issues and prevent further incidents. It will also save lives, and it will save time, since autonomous driving gives people back the hours they otherwise spend driving their daily commutes.